Deep Drawing


Deep Drawing is a research project that employs AI to create a novel form of intermedia performance. Our system tracks an artist's pen by localizing the sound it makes as it glides across the surface of a drawing board. By focusing on these subtle, often ignored noises, I embrace the aesthetic value of the AI's misinterpretations and errors. The AI output, especially when it deviated from the human input, generated a productive aesthetic tension that led the human artists to diverge from their original intent. I invite humanity to reconsider the evolving role of AI as our companion in a positive, synergetic feedback loop, rather than as an oracle providing one-directional outputs to replace human ingenuity.

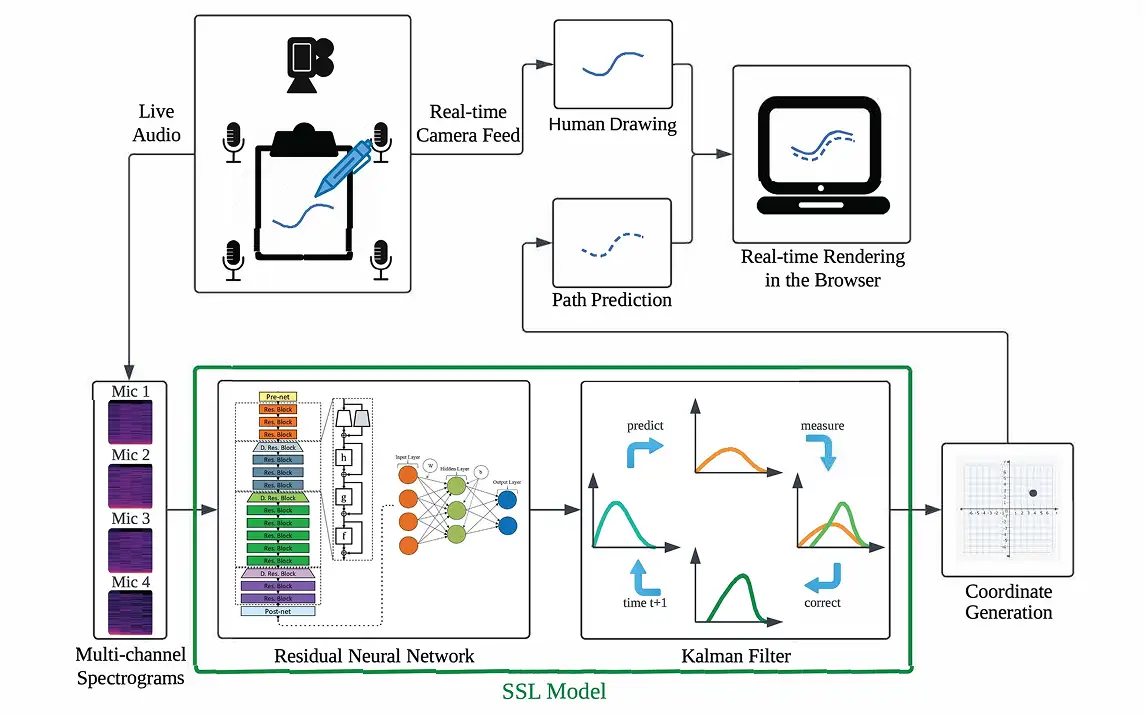

At the core of our system are a residual neural network and a Kalman filter, which I developed to improve upon our previous convolutional neural network (CNN) design. The system captures sound from four contact microphones attached to the surface of the drawing board, generates spectrograms, and predicts the pen's path. It then renders its own calligraphic realization connecting these predictions and overlays it onto the video capture of the human drawing. In this way, the system creates a real-time artistic dialogue on a shared digital canvas powered by React and Vite.
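The page does not describe how the Kalman filter is configured, so the following is only a minimal sketch of one common way such a filter could smooth a stream of per-frame pen-position predictions. It assumes a constant-velocity motion model, treats the x and y axes as independent, and uses hypothetical names (`AxisKalman`, `PenTracker`) and hypothetical noise parameters (`q`, `r`) and frame interval (`dt`); none of these come from the Deep Drawing implementation.

```python
from dataclasses import dataclass, field


@dataclass
class AxisKalman:
    """Hypothetical constant-velocity Kalman filter for one axis.

    State is [position, velocity]; only position is measured.
    """
    q: float = 1e-3  # assumed process-noise intensity (white-noise acceleration)
    r: float = 1e-2  # assumed variance of the network's position predictions
    x: list = field(default_factory=lambda: [0.0, 0.0])
    p: list = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])

    def step(self, z: float, dt: float) -> float:
        # Predict: x = F x with F = [[1, dt], [0, 1]].
        pos, vel = self.x
        pos += dt * vel
        (p00, p01), (p10, p11) = self.p
        # Predict covariance: P = F P F^T + Q (white-noise acceleration Q).
        n00 = p00 + dt * (p01 + p10) + dt * dt * p11 + self.q * dt**3 / 3
        n01 = p01 + dt * p11 + self.q * dt**2 / 2
        n10 = p10 + dt * p11 + self.q * dt**2 / 2
        n11 = p11 + self.q * dt
        # Update with measurement matrix H = [1, 0].
        s = n00 + self.r
        k0, k1 = n00 / s, n10 / s
        innovation = z - pos
        pos += k0 * innovation
        vel += k1 * innovation
        # Covariance update: P = (I - K H) P.
        self.p = [[(1 - k0) * n00, (1 - k0) * n01],
                  [n10 - k1 * n00, n11 - k1 * n01]]
        self.x = [pos, vel]
        return pos


class PenTracker:
    """Hypothetical 2D smoother built from two independent axis filters."""

    def __init__(self, q: float = 1e-3, r: float = 1e-2):
        self.axes = (AxisKalman(q, r), AxisKalman(q, r))
        self.initialized = False

    def update(self, xy: tuple, dt: float) -> tuple:
        if not self.initialized:
            # Start at the first prediction with zero velocity.
            for axis, z in zip(self.axes, xy):
                axis.x = [z, 0.0]
            self.initialized = True
            return tuple(xy)
        return tuple(axis.step(z, dt) for axis, z in zip(self.axes, xy))


if __name__ == "__main__":
    tracker = PenTracker()
    dt = 1 / 30  # assumed prediction rate of 30 frames per second
    jittery = [(0.5 + 0.05 * (-1) ** n, 0.5) for n in range(60)]
    smoothed = [tracker.update(xy, dt) for xy in jittery]
    print(smoothed[-1])
```

In this sketch, the ratio of `q` to `r` controls the trade-off: a larger `r` trusts the network's predictions less and yields a smoother but laggier line, while a larger `q` lets the estimate follow sudden changes in pen direction more closely.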

Working with Professors Julie Zhu and John Granzow at the University of Michigan, I am continuing the development of Deep Drawing with novel forms of data preprocessing, data augmentation, and neural network architectures.

Deep Drawing is supported by the Performing Arts Technology Department and the ADVANCE program at the University of Michigan.
